Design Lab

Research and development of technologies for learning

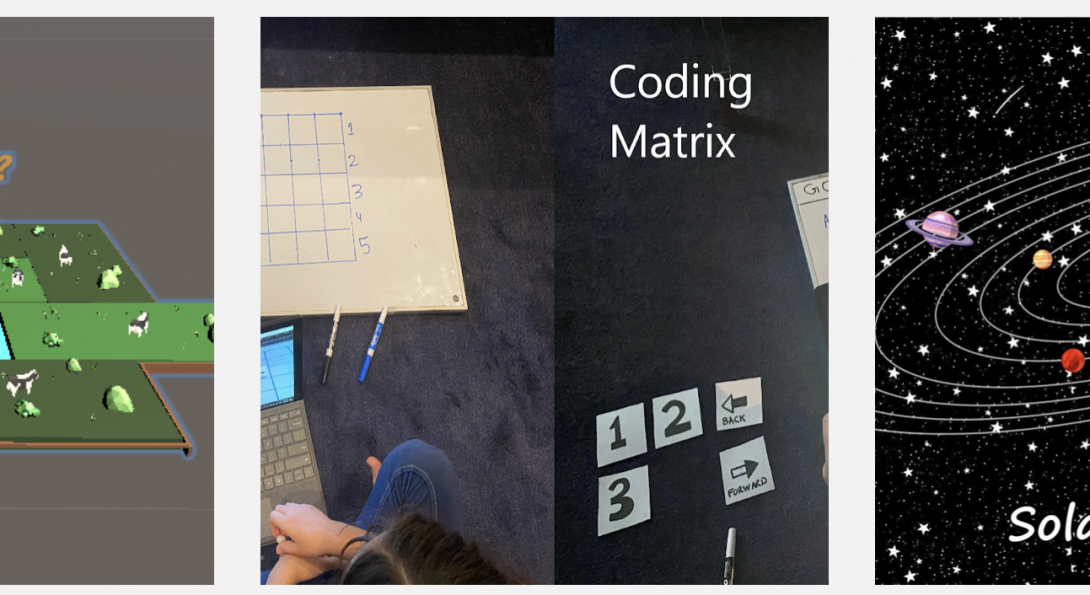

Sample work produced by students enrolled in LSRC 594, a graduate course offered jointly by the University of Illinois at Chicago’s (UIC’s) Learning Sciences Research Institute (LSRI), taught by Brenda Lopez Silva, and UAM-Azcapotzalco’s Graduate Program in Design.

Course Projects designed for the course webpage

THE LAB

About the lab

Asking questions is one of the best ways to practice a curious mindset: questions that challenge assumptions, inspire others, open up a broader context, and prompt reflection.

What can we do?

• Identify technological needs by thinking and questioning

• Build prototypes to learn and teach

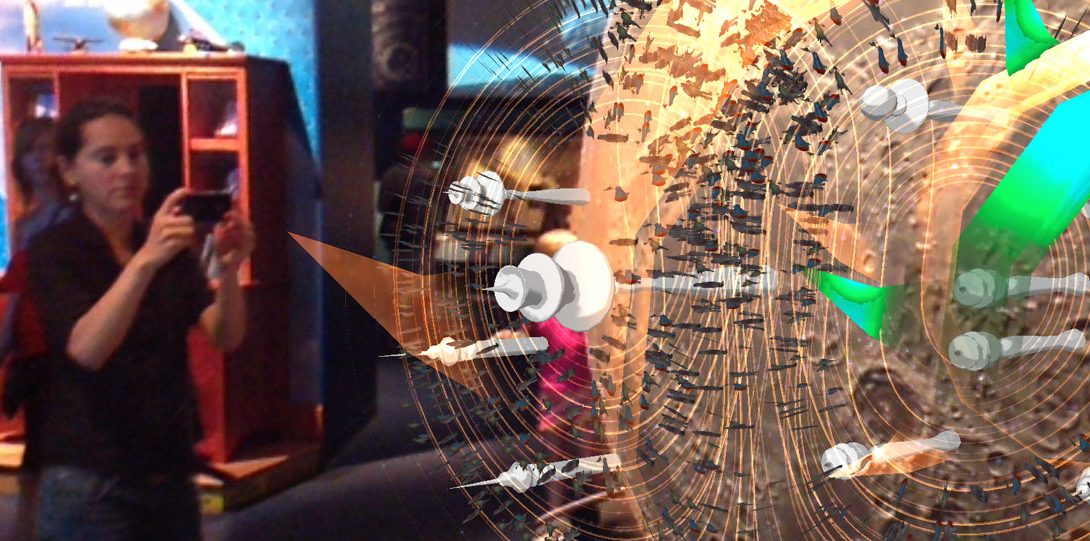

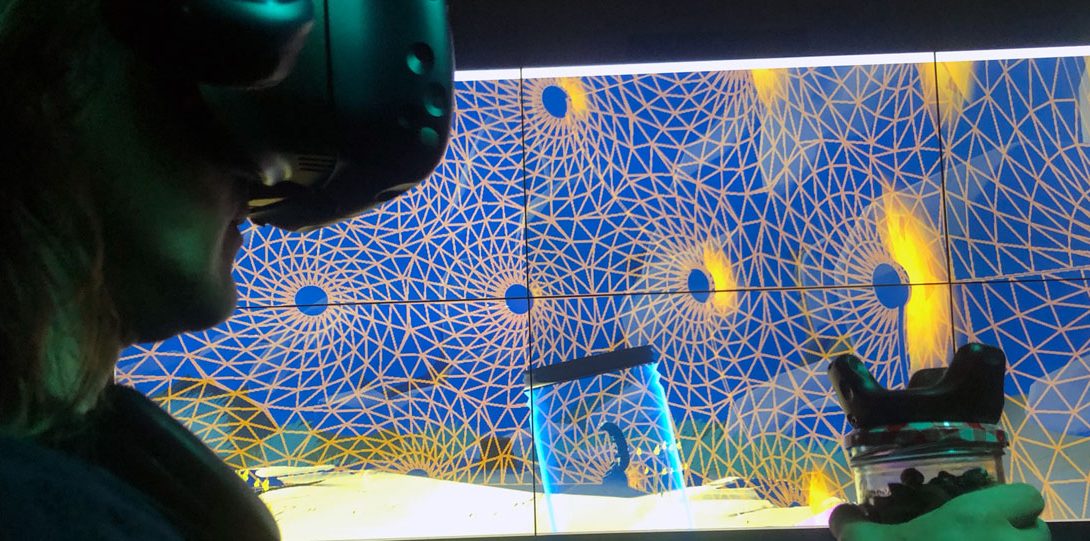

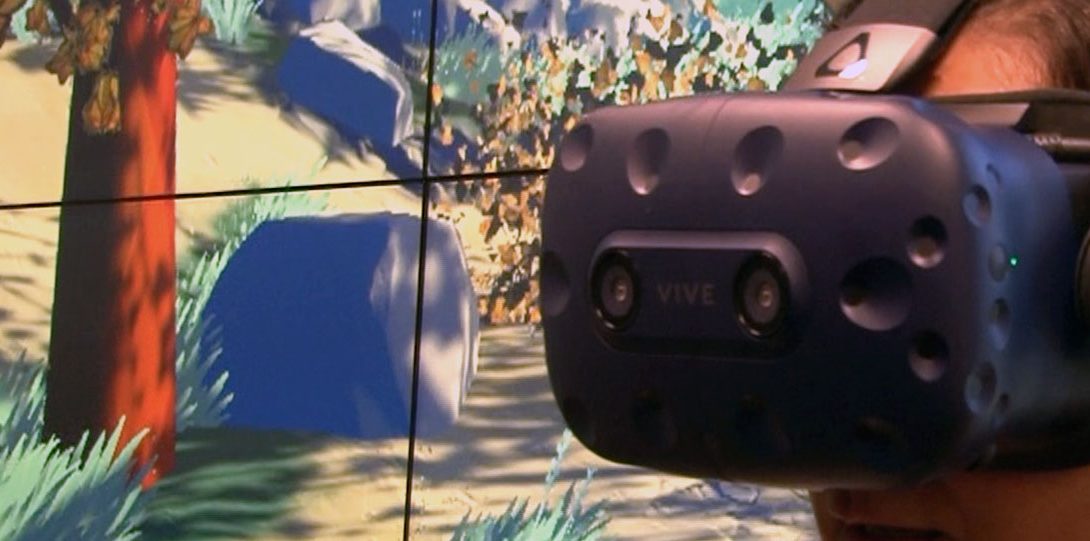

• Build opportunities to engage learners

• Research: XR technologies (AR/VR), visualizations and large-scale displays, tangible technologies, embedded technologies, and eye tracking and EEG

Who can be involved

Any UIC faculty, staff or student interested in learning about and working with these technologies can take advantage of the resources of the Realization Lab.

Additionally, we’re actively seeking to collaborate with individuals from other institutes of higher learning, museums, corporate settings, and nonprofits.

Current technologies

We have a range of technologies available for developing and researching the design of learning environments in formal and informal settings.

We currently work with schools to develop AR, VR, and XR curricular materials that engage middle school students in problem-based projects scaffolding STEM concepts.

The lab is equipped with:

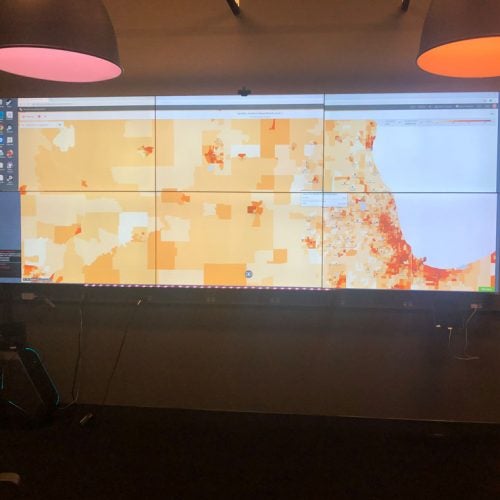

SAGE2 Visualizations/Large scale displays

Tangible technologies such as Vive trackers, Arduino, Estimotes

Embedded technologies

Eye tracking

VR/AR/XR

Learning Technologies

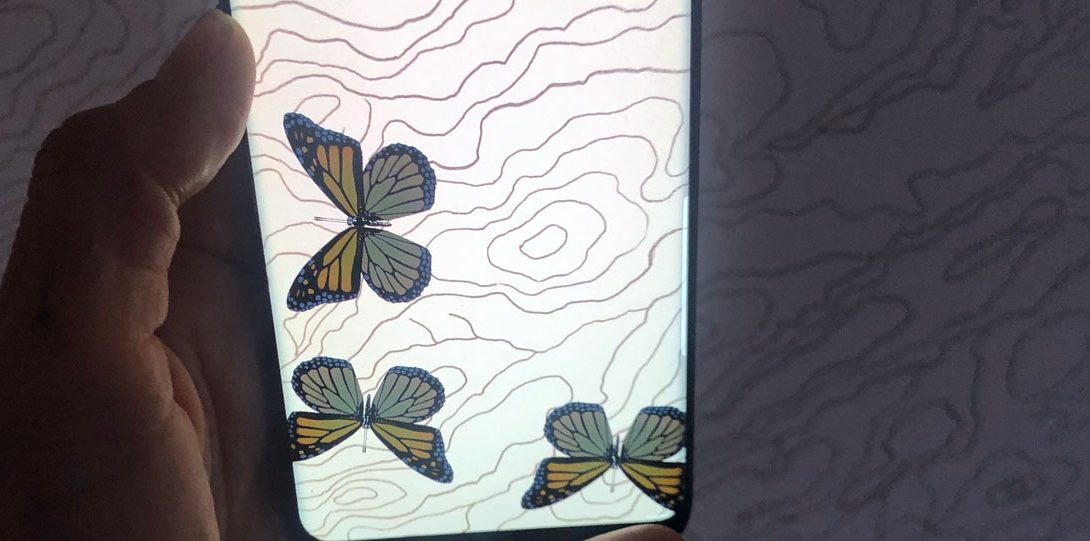

Monarch Butterfly Flight

Here’s a demonstration of a VR environment created to enhance student understanding of the challenges faced by monarch butterflies as they make their way to Mexico during migration season. The project involves multiple technical considerations to explore ideas of mixed reality and user interaction.